I am a Principal Scientist Manager at Microsoft (since 2021) - based in Cambridge (UK) - where I lead a team working on real-time perception and generation of human motion for remote communication in 2D and Mixed Reality. Our work at Microsoft has shipped in several products, including Teams and Microsoft Mesh.

Up to 2021 I was a full-time Professor of Computer Science at the University of Bath, which I joined as a Royal Academy of Engineering/EPSRC Research Fellow in 2007 and where I still hold a part-time position. I have been fortunate enough to be awarded two previous Research Fellowships: a Royal Academy of Engineering Research Fellowship (2007-2012) and a Royal Society Industry Fellowship with Double Negative Visual Effects (2012-2016).

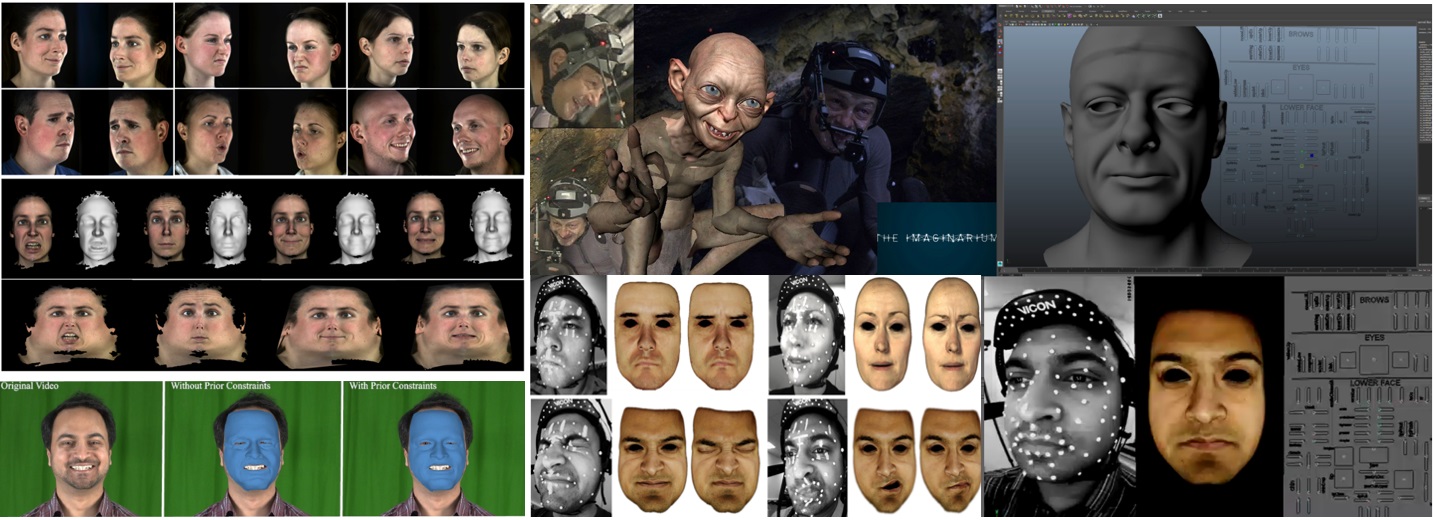

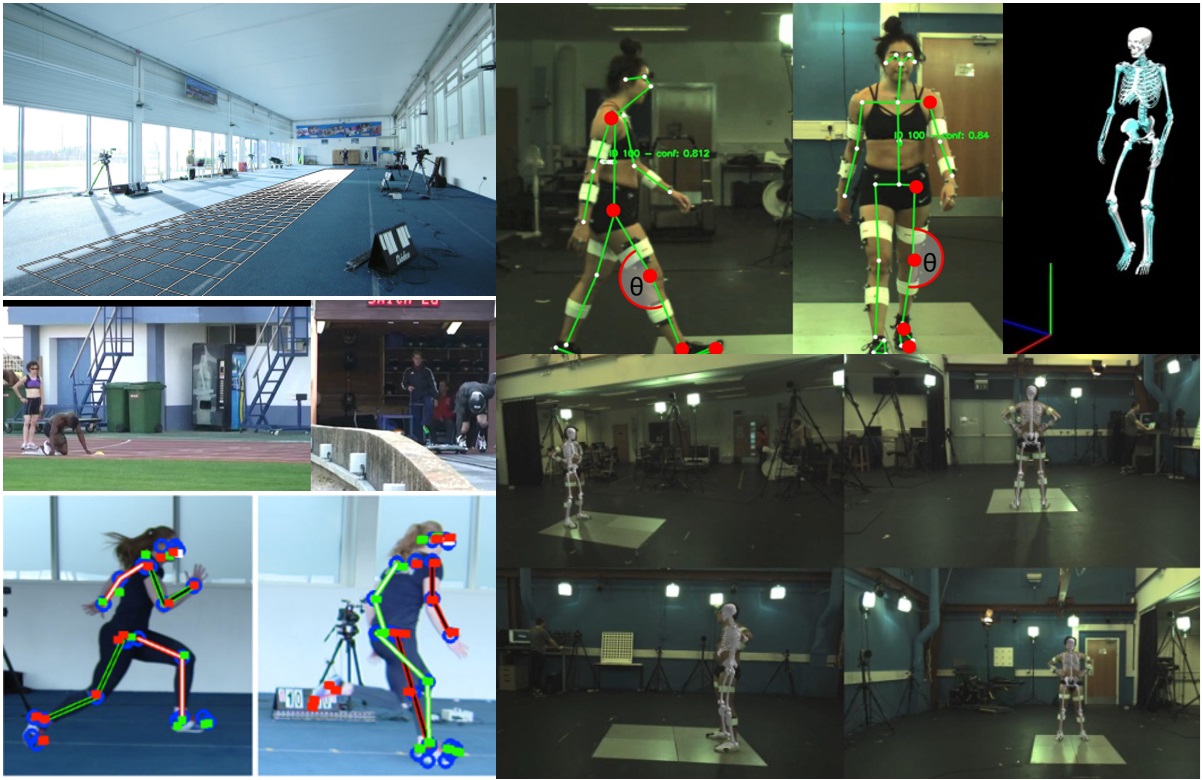

In 2015 I founded and became Director of the Centre for the Analysis of Motion, Entertainment Research and Applications (CAMERA), growing the centre from 0 to over 50 people and raising over £20m in funding from the UK Research Councils (EPSRC, AHRC), with contributions from partners including The Imaginarium, The Foundry, British Skeleton, Ministry of Defence and British Maritime Technologies. In 2020 - before stepping down as Director to join Microsoft - I led the successful re-funding of CAMERA for another 5 years (EPSRC) and helped secure the £45m+ MyWorld project in the South West of the UK.

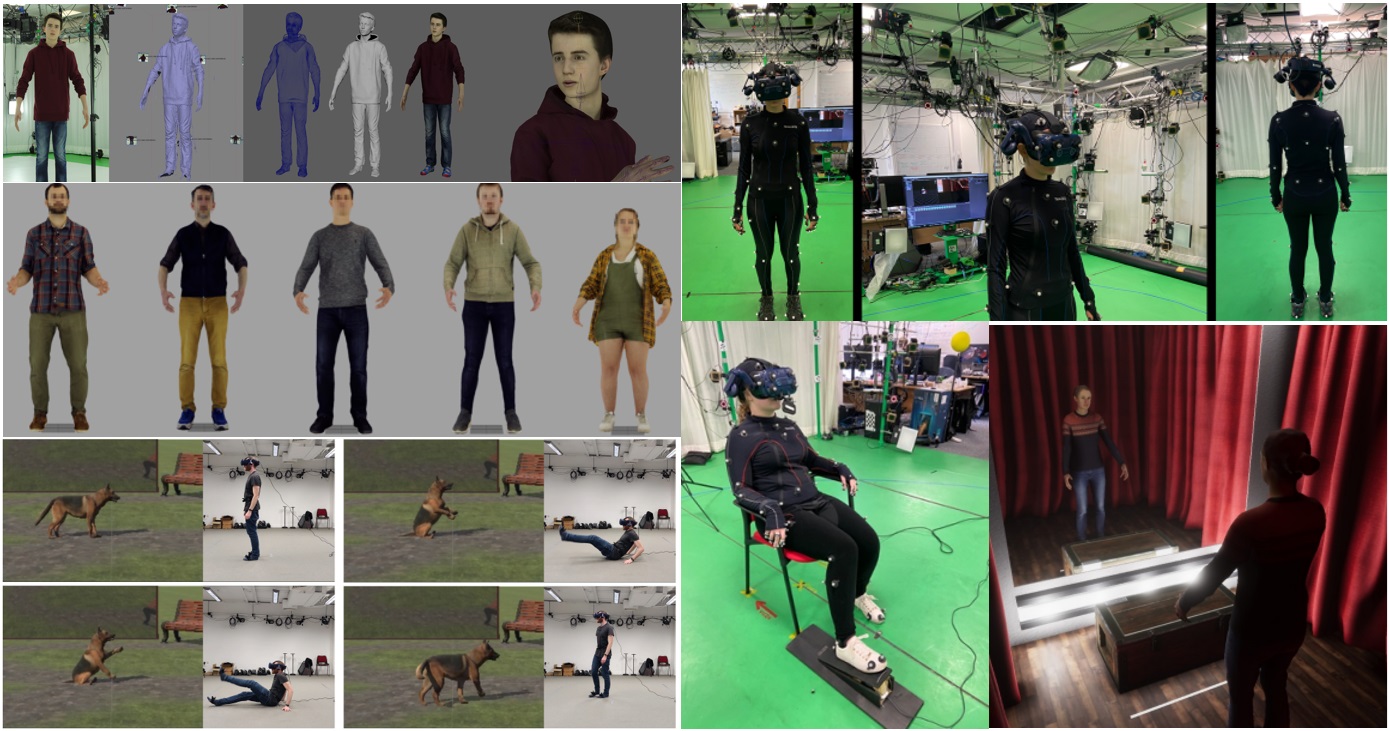

I'm interested in several different areas across research and product engineering (see sections below on Product and Research Impact). However, broadly these interests involve applying computer vision, graphics, generative AI and animation science and engineering to problems in Mixed Reality (Avatars, Presence and Communication), Motion Capture, Video Game Animation, Visual Effects and Biomechanics.

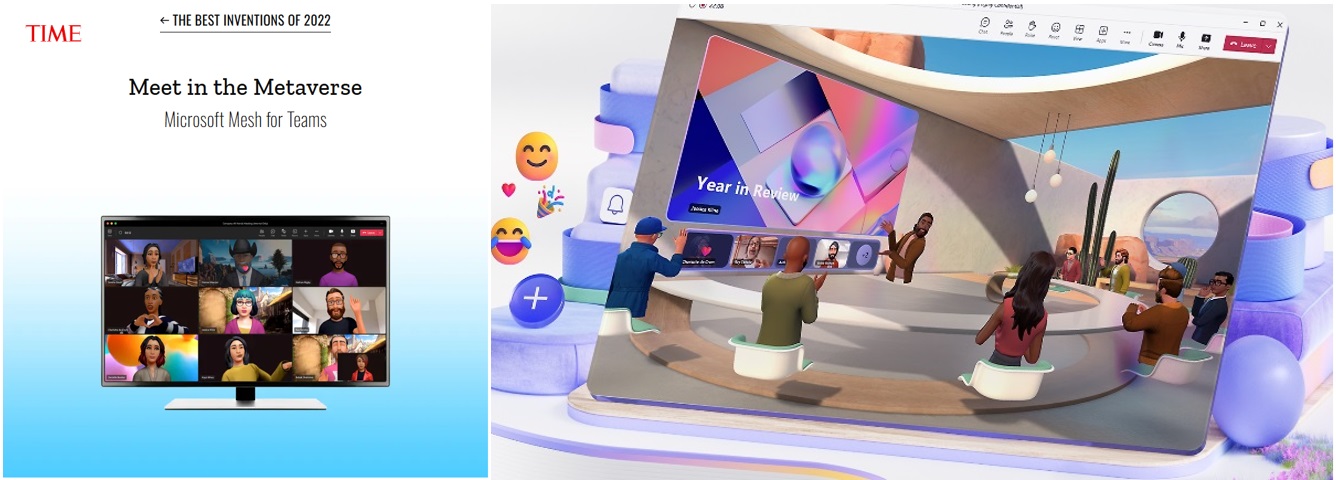

At Microsoft I have been fortunate to ship my work into the hands of millions of customers through Microsoft Mesh and Teams, where my team and I have created and shipped real-time avatar animation technology, driven by voice and video, for remote and immersive meetings. Microsoft Mesh is a platform that allows people to connect in virtual worlds, on desktop or in VR. A core part of Mesh is its Avatars, which you can use in immersive 3D meetings or in standard 2D Teams calls.

At CAMERA I created a multi-disciplinary research environment with three themes, each converging on technology around human perception, analysis and synthesis: (1) Entertainment, (2) Human Performance Enhancement, and (3) Health & Rehabilitation. Each theme was paired with industrial partners, giving us real applications and routes to impact.

At CAMERA I also created a framework that let us get a large amount of real-world impact from our research. We did this by creating production tools from our research with a team of engineers, deploying them into our studio, and then delivering projects - from motion capture to digital humans - to clients using these tools. This helped ship a number of video games and award-winning immersive experiences. Below is a snapshot of some of the shipped products and experiences I have been fortunate enough to be involved in, as lead or as part of a wider team.

11:11 Memories Retold - with Aardman and Bandai Namco (BAFTA nominated). We delivered all motion capture for the video game at our CAMERA studio (available on PlayStation, Xbox, PC).

'Is Anna OK?' - with BBC and Aardman. An immersive experience delivered with our in-house facial rigging, animation and motion capture solution.

Cosmos Within Us - with Satore Studios (Cannes Lion Winner). We built digital doubles for performers and animated them using our in-house tools.

Research Impact: From Digital Humans to Biomechanics and Human Perception

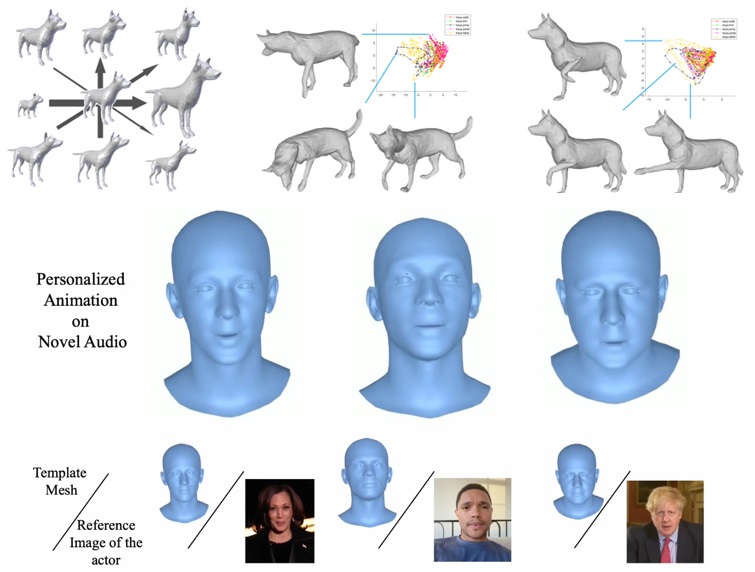

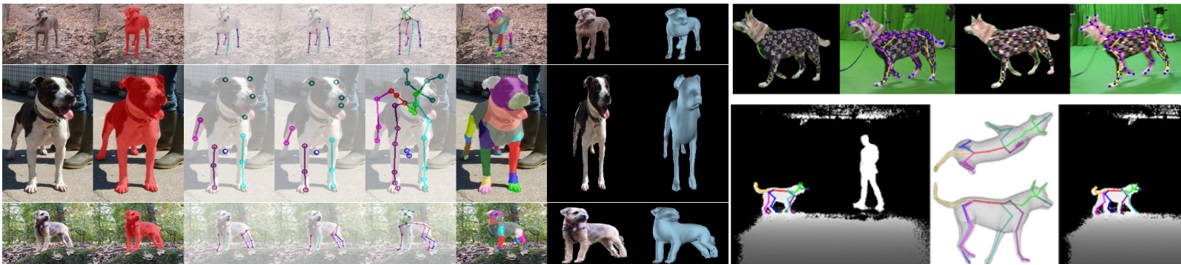

My research has spanned several areas over the years: building digital humans, motion capture of faces, bodies and animals, animation, computer vision and biomechanics. One of the central themes, though, has been motion: understanding it, measuring it, and finding ways to use it in different scenarios and applications. A list of my papers can be found on my Google Scholar page (I no longer maintain an up-to-date list here). Broadly, however, my research has fallen into the following themes.

As a Professor, one of the core activities is funding research. The primary way to do this is through government-funded research councils. In the UK, the major body is UKRI, under which subject-specific councils sit, e.g. EPSRC (Engineering and Physical Sciences, including Computer Science) and AHRC (Arts and Humanities). Below is a list of the major funding I received for my academic research, used to fund large-scale research centres (e.g. CAMERA) and more targeted short-term activities.

(PI Uni. of Bath) 2021-2026: MyWorld (~£45m FEC). UKRI

(PI) 2020-2025: CAMERA 2.0 - Centre for the Analysis of Motion, Entertainment Research and Applications (£4,151,614 FEC). EPSRC

(PI) 2019-2021: CAMERA Motion Capture Innovation Studio (£901,391) Horizon 2020

(PI) 2019-2022: A tool to reveal Individual Differences in Facial Perception (£402,113) Medical Research Council (MRC)

(PI) 2018-2020: Rheumatoid Arthritis Flare Profiler (£165,126, Total project value £663,290). Partners: Living With, NHS. Innovate UK

(Co-I) 2018-2022: Bristol and Bath Creative Cluster (~£4m). Partners: UWE, University of Bristol, Bath Spa University. AHRC

(PI) 2017-2019: DOVE: Deformable Objects for Virtual Environments (£128,746, Total project value £562,559 FEC). Partners: Marshmallow Laser Feast, Heston's Fat Duck. Innovate UK

(PI) 2016-2018: HARPC: HMC for Augmented Reality Performance Capture (£119,025, Total project value £517,616 FEC). Partner: The Imaginarium. Innovate UK

(PI) 2015-2020: Centre for the Analysis of Motion, Entertainment Research and Applications - CAMERA (£4,998,728 FEC). Partners: The Imaginarium, The Foundry, Ministry of Defence, British Maritime Technologies, British Skeleton. EPSRC/AHRC. (not including partner contributions, ~£5,000,000).

(PI) 2015-2017: Biped to Animal (£108,109 FEC). Partner: The Imaginarium. Innovate UK.

(PI) 2015: Goal Oriented Real Time Intelligent Performance Retargeting (£29,997 FEC). Partner: The Imaginarium. Innovate UK.

(Co-I) 2013-2016: Acquiring Complete and Editable Outdoor Models from Video and Images (£1,003,256 FEC). EPSRC.

(PI-Bath) 2014-2017: Visual Image Interpretation in Man and Machine (VIIMM) (£121,030 FEC). Partner: University of Birmingham. EPSRC

(PI) 2012-2016: Next Generation Facial Capture and Animation (£100,887 FEC). Partner: Double Negative Visual Effects. The Royal Society Industry Fellowship.

(PI) 2007-2012: Exploiting 4D Data for Creating Next Generation Facial Modelling and Animation Techniques (£460,640 FEC). The Royal Academy of Engineering Research Fellowship.

Other funding: PhD Studentships, EPSRC Innovation Acceleration Account (IAA), Nuffield Foundation.

Over the years I have collected and made public several datasets. Below are the major ones still in use today.

RGBD-Dog: RGBD-Dog contains motion capture and multiview (Sony) RGB and (Kinect) RGBD data for several dogs performing different actions (all cameras and mo-cap synchronised, with calibration data included). You can get the data, the code to view it and the CVPR 2020 paper it is all based on from our GitHub page. In our CVPR 2020 paper we use the data to train a model to predict dog pose from RGBD data. However, it also works pretty well on other animals. In the future we will expand the data and code as we publish more of our research.

D3DFACS: The D3DFACS dataset contains over 500 FACS-coded dynamic 3D (4D) sequences from 10 individuals, including 3D meshes, stereo UV maps, colour camera images and calibration files. You can find out more about it in our ICCV 2011 paper "A FACS Valid 3D Dynamic Action Unit Database with Applications to 3D Dynamic Morphable Facial Modelling". If you would like to download the dataset for academic research, please visit the dataset website.

Shadow Removal Ground Truth and Evaluation: To encourage open comparison of single-image shadow removal methods in the community, we provide an online benchmark site and a dataset. Our quantitatively verified, high-quality dataset contains a wide range of ground truth data (214 test cases in total). Each case is rated on 4 attributes (texture, brokenness, colourfulness and softness) in 3 perceptual degrees from weak to strong. To access the evaluation website, please visit here.

At Microsoft I lead a team of amazingly talented scientists and engineers. As a Professor and previous Director of CAMERA, I have also been fortunate to work with some amazing students, researchers and engineers.

Martin Parsons (CAMERA); Murray Evans (CAMERA); Yiguo Qiao (Living With/RUH/Innovate UK); Jack Saunders; George Fletcher; Jake Deane; Kyle Reed (Cubic Motion); Jose Serra (Digital Domain/ILM); Anamaria Ciucanu (MMU); Pedro Mendes; Shridhar Ravikumar (Amazon, Apple); Alastair Barber (The Foundry); Wenbin Li (Bath); Han Gong (Apple); Charalampos Koniaris (Disney Research); Daniel Beale; Sinan Mutlu (Framestore); Nicholas Swafford; Nadejda Roubtsova (CAMERA); Sinead Kearney (CAMERA); Maryam Naghizadeh; Catherine Taylor (Marshmallow Laser Feast)

I love my work, but of course the number one thing in my life is my family. If anything, having a family motivates me even more in my work - giving me the desire to make sure we all have the best life! Having a family also forces you to be much more efficient and productive with the time that you are working - and of course, appreciate the precious time you have with each other even more.